Quick nginx hack to save your production web server in case the web service starts crashing.

If you have worked in a production environment, you know that a web service can sometimes crash for an unknown reason (often a code failure). Doing the RCA and fixing the issue takes time. A quick hack is to run multiple instances of the web service on different ports, so that the others can take the load if any one of them goes down. You should have this as a fallback for service failures anyway. DevOps teams generally use autoscaling for such situations.

Note: This won’t work if the crash is caused by inconsistent data, since all the instances will then crash.

Steps

Here go the how-to steps:

Start the services

Start 3 processes of the web service on different ports (`3000`, `3002`, and `3004` in the config below).

Upstream conf

Add your upstream (here `myserver`) to your nginx conf file:

    upstream myserver {
        server 127.0.0.1:3000 fail_timeout=0;
        server 127.0.0.1:3002 fail_timeout=0;
        server 127.0.0.1:3004 backup;
    }

Here nginx and the web services are running on the same box. If you are running your services on a different machine, replace `127.0.0.1` with that machine’s IP.

Two services (the ones running on ports `3000` and `3002`) share the load, and one (on `3004`) is a backup that nginx falls back to when the primary servers are unavailable.

Update path

In the location block of your nginx conf, add the following code, replacing:

- `myroute` with your route
- `myserver` with your upstream name

    location ~ ^/myroute/(.*) {
        proxy_next_upstream error timeout invalid_header http_502 http_503 http_504;
        proxy_pass http://myserver;
    }

Read about `proxy_next_upstream` in the nginx documentation.

Reload nginx

Run the following to reload the configuration:

    sudo systemctl reload nginx
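If you want to try the setup before pointing it at your real service, a throwaway backend that reports which port answered can help. The sketch below is only a stand-in, not the service from this post: the file name `backend.py` and the names `PortEchoHandler` and `make_backend` are made up for illustration, and it uses nothing beyond the Python standard library.

```python
import sys
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class PortEchoHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in handler: answers every GET with the serving port."""

    def do_GET(self):
        port = self.server.server_address[1]
        body = f"served by port {port}\n".encode()
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the console quiet


def make_backend(port, host="127.0.0.1"):
    """Create (but do not start) a stand-in backend bound to host:port."""
    return ThreadingHTTPServer((host, port), PortEchoHandler)


if __name__ == "__main__" and len(sys.argv) == 2:
    # Usage: python backend.py 3000
    make_backend(int(sys.argv[1])).serve_forever()
```

Assuming nginx listens on port 80 of the same box, you could start three copies (`python backend.py 3000`, `python backend.py 3002`, `python backend.py 3004`), request `http://localhost/myroute/test` a few times, then stop one copy and check that requests are still answered.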

Extra

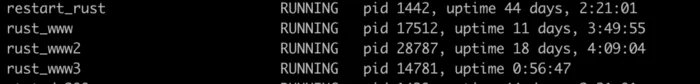

In my case, it was our Rust web service that kept getting stuck, failing all subsequent requests. I created a health-check service that monitored the Rust service and restarted it whenever that happened. But I knew that many requests would still fail during the downtime, and we didn’t want that. So I ran three processes of this service on different ports and proxied them as shown above.
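For readers who want a starting point for such a health check, here is a minimal sketch. It is not the health-check service described above, whose details are not given here. It assumes the service exposes some URL that returns a 2xx status when healthy and that it runs as a systemd unit. The `/health` path, the unit name, and the names `is_healthy` and `watch` are all hypothetical.

```python
import http.client
import subprocess
import sys
import time
import urllib.error
import urllib.request

# Bypass any proxy from the environment; the check targets a local service.
_opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def is_healthy(url, timeout=2.0):
    """Return True if `url` answers with a 2xx status within `timeout` seconds."""
    try:
        with _opener.open(url, timeout=timeout) as resp:
            return 200 <= resp.status < 300
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        # Connection refused, timeout (a stuck service), or an HTTP error status.
        return False


def watch(url, restart_cmd, interval=5.0, max_failures=3, checks=None):
    """Poll `url`; run `restart_cmd` after `max_failures` consecutive failures.

    `checks` limits the number of polls (None means run forever).
    Returns the number of restarts triggered.
    """
    failures = 0
    restarts = 0
    done = 0
    while checks is None or done < checks:
        if is_healthy(url):
            failures = 0
        else:
            failures += 1
            if failures >= max_failures:
                subprocess.run(restart_cmd, check=False)
                restarts += 1
                failures = 0
        done += 1
        time.sleep(interval)
    return restarts


if __name__ == "__main__" and len(sys.argv) == 3:
    # Usage (hypothetical names):
    #   python healthcheck.py http://127.0.0.1:3000/health my-rust-service
    watch(sys.argv[1], ["systemctl", "restart", sys.argv[2]])
```

Requiring several consecutive failures before restarting is a design choice that avoids restarting on a single slow response. You would run one watcher per instance, so a restart on one port does not disturb the others.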